By replenishing capital, banks can consolidate their capital strength and improve their ability to withstand risk; a stronger capital base also helps raise the capital adequacy ratio and increase support for the real economy. In the future, small and medium-sized banks may receive differentiated policy support for issuing preferred shares and perpetual bonds.

Recently, with continued policy support, small and medium-sized commercial banks have accelerated their issuance of capital replenishment bonds, and demand for bond issuance among large commercial banks has also rebounded as the year-end approaches. The latest data show that both the number and the scale of bonds issued by commercial banks rose significantly in November, with total issuance remaining at a high level. In terms of structure, commercial banks issued 21 bonds in November, including 4 perpetual bonds and 9 tier 2 capital bonds.

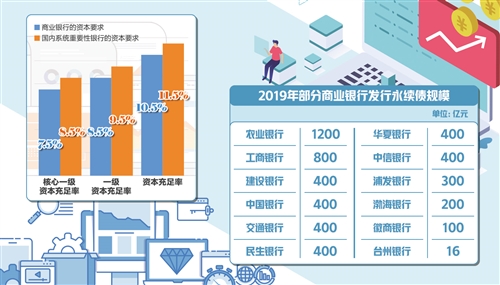

Recently, Taizhou Bank and Huishang Bank each issued perpetual bonds in the national interbank bond market. Taizhou Bank’s first tranche, at 1.6 billion yuan, was the first perpetual bond issued by a city commercial bank in China. According to statistics, banks of all sizes have issued significantly more perpetual bonds, subordinated bonds and convertible bonds this year than in previous years.

Banks are enthusiastic about issuing bonds.

In January this year, Bank of China publicly issued 40 billion yuan of undated capital bonds in the interbank market, filling the gap in China’s market for commercial bank perpetual bonds.

Bank of China’s successful issuance provided a model for other commercial banks to issue undated capital bonds and broadened the channels through which commercial banks can replenish capital with additional tier 1 instruments. Agricultural Bank of China, Industrial and Commercial Bank of China, Shanghai Pudong Development Bank, Huaxia Bank, Minsheng Bank, China CITIC Bank and others subsequently issued perpetual bonds, with Agricultural Bank of China issuing the largest amount, 120 billion yuan.

A perpetual bond is a bond issued by a financial institution or non-financial enterprise that has no stated maturity: the issuer is not obliged to repay the principal, and the investor receives interest at the coupon rate indefinitely. If the issuer goes bankrupt, holders are repaid after depositors, general creditors and subordinated debt.

"Banking financial institutions, especially small and medium-sized banks, are under great pressure to replenish capital at present. On the one hand, replenishing capital lets banks consolidate their capital strength and improve their ability to withstand risk; on the other hand, it helps raise the capital adequacy ratio and increase support for the real economy," said Wen Bin, chief researcher of China Minsheng Bank.

Banks replenish capital through two main channels: endogenously, from their own retained profits, and exogenously, through IPOs, capital replenishment bonds and similar instruments. Dong Ximiao, chief researcher of Xinwang Bank and distinguished researcher of National Finance and Development Laboratory, said: "China’s commercial banks have long relied on common stock financing as their main source of exogenous capital. Given the current market environment, the relevant departments should increase policy support, encourage commercial banks to make wider use of additional tier 1 capital instruments and qualifying tier 2 capital instruments, such as preferred shares, perpetual bonds and convertible bonds, and establish a long-term mechanism for capital replenishment."

At the same time, with profit growth in the banking industry slowing and provisioning requirements rising, endogenous capital replenishment is constrained, which makes exogenous replenishment more urgent and necessary. "Perpetual bonds and preferred shares are innovative capital replenishment tools. Issuing them not only strengthens the banking industry’s ability to serve the real economy but also helps optimize banks’ capital structure," Wen Bin said.

Small and medium-sized banks receive policy support.

"Focus on supporting small and medium-sized banks to replenish capital"; "At present, focus on supporting small and medium-sized banks to replenish capital through multiple channels, optimize capital structure, and enhance their ability to serve the real economy and resist risks"; "enhance the capital strength of commercial banks, especially small and medium-sized banks, through multiple channels"... From the eighth meeting of the State Council Financial Stability and Development Committee, held at the end of September, to the ninth and tenth meetings, held in November, capital replenishment for small and medium-sized banks came up every time.

Wen Bin pointed out: "Small and medium-sized banks face greater pressure and more urgent demand to replenish capital. Recently, the regulatory authorities have issued a series of policies to encourage and support small and medium-sized banks in replenishing capital through multiple channels, including encouraging qualified small and medium-sized banks to list and lowering the threshold for them to issue preferred shares and perpetual bonds." In his view, these measures help small and medium-sized banks strengthen their capital, improve their resilience to risk and increase support for the real economy.

In July this year, the regulatory authorities issued the Guiding Opinions on Supplementing Tier 1 Capital by Issuing Preferred Shares by Commercial Banks (Revised), which relaxed the restrictions on unlisted commercial banks (mainly small and medium-sized banks) replenishing additional tier 1 capital by issuing preferred shares. On November 7, Taizhou Bank was approved to issue up to 5 billion yuan of perpetual bonds. The industry sees this as a major signal that the regulatory authorities support capital replenishment by small and medium-sized banks. "Overall, small and medium-sized banks may receive differentiated policy support in the future for issuing preferred shares and perpetual bonds, which are tools for replenishing banks’ tier 1 capital," said Wang Qing, chief macro analyst of Oriental Jincheng.

"Since the beginning of this year, as strict financial supervision has continued, the emergence of risks at individual small and medium-sized banks, including Baoshang Bank, has become a major concern of the regulatory authorities. In addition, some small and medium-sized banks, typified by certain rural commercial banks, face greater pressure to replenish capital," Wang Qing said.

Debt issuance will continue next year.

Capital is commercial banks’ ultimate means of absorbing losses and resisting risks. Under the Capital Management Measures of Commercial Banks (Trial), the required core tier 1 capital adequacy ratio, tier 1 capital adequacy ratio and capital adequacy ratio for commercial banks are 7.5%, 8.5% and 10.5% respectively, while the corresponding requirements for domestic systemically important banks are 8.5%, 9.5% and 11.5%.

"The share of additional tier 1 capital at China’s commercial banks is clearly low, and the additional tier 1 capital adequacy ratio of city commercial banks and rural commercial banks is significantly below the average," said Zeng Gang, deputy director of the National Finance and Development Laboratory. At present, he said, the main ways for commercial banks to replenish tier 1 capital include perpetual bonds and preferred shares. With defaults in the bond market increasing and credit premiums rising, small and medium-sized banks, whose issuer ratings are low, still face thresholds and obstacles in the approval, market acceptance and pricing of their perpetual bonds.

Looking ahead, several market participants told reporters that they expect banks to keep issuing perpetual bonds, tier 2 capital bonds and convertible bonds next year. "Overall market liquidity will be abundant next year, and market interest rates are expected to fall, which will help reduce banks’ bond issuance costs," Wen Bin said. Although spreads will differ somewhat among banks with different credit ratings, he added, market demand for bank bond issuance will on the whole remain strong next year.
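The regulatory ratios quoted above, and the way a perpetual bond lifts a bank’s tier 1 ratio, can be illustrated with a short calculation. The Python sketch below is illustrative only. It assumes the standard definition of a capital adequacy ratio as capital divided by risk-weighted assets and uses the required ratios cited from the Capital Management Measures. The `BankCapital` class, the `capital_ratios` and `shortfalls` helpers, and all balance-sheet figures are hypothetical and do not describe any bank named in this article.

```python
from dataclasses import dataclass, replace

# Required ratios in percent, as quoted in the article.
# Key: whether the bank is a domestic systemically important bank.
REQUIRED = {
    False: {"core_tier1": 7.5, "tier1": 8.5, "total": 10.5},
    True: {"core_tier1": 8.5, "tier1": 9.5, "total": 11.5},
}


@dataclass(frozen=True)
class BankCapital:
    """Hypothetical balance-sheet figures, all in the same currency unit."""
    core_tier1: float
    additional_tier1: float  # e.g. perpetual bonds, preferred shares
    tier2: float             # e.g. tier 2 capital bonds
    risk_weighted_assets: float
    systemically_important: bool = False


def capital_ratios(bank: BankCapital) -> dict:
    """Return the three capital adequacy ratios in percent."""
    tier1 = bank.core_tier1 + bank.additional_tier1
    total = tier1 + bank.tier2
    rwa = bank.risk_weighted_assets
    return {
        "core_tier1": 100 * bank.core_tier1 / rwa,
        "tier1": 100 * tier1 / rwa,
        "total": 100 * total / rwa,
    }


def shortfalls(bank: BankCapital) -> dict:
    """Return the gap in percentage points for each ratio below its requirement."""
    ratios = capital_ratios(bank)
    required = REQUIRED[bank.systemically_important]
    return {
        name: round(required[name] - ratios[name], 4)
        for name in required
        if ratios[name] < required[name]
    }


# A made-up small bank with no additional tier 1 capital.
before = BankCapital(core_tier1=80.0, additional_tier1=0.0, tier2=20.0,
                     risk_weighted_assets=1000.0)
# The same bank after a hypothetical perpetual bond issue of 10 units.
after = replace(before, additional_tier1=10.0)

print(shortfalls(before))
print(shortfalls(after))
```

In this made-up case, the bank meets the core tier 1 requirement but falls 0.5 percentage points short on both the tier 1 and total ratios. Because a perpetual bond counts toward additional tier 1 capital, the hypothetical issue raises both ratios and clears the shortfall without any new common equity.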

Yin Haicheng, an analyst with the Financial Business Department of Oriental Jincheng, said that perpetual bonds are expected to shift gradually from an "emergency transfusion" to "regular issuance" and become a routine tool of bank capital management. He suggested that, along the way, laws and regulations specific to commercial bank perpetual bonds be promulgated to set out issuance conditions, issuance and approval procedures, and information disclosure requirements. He also called for promoting a more diverse investor base, including applying to the National Council for Social Security Fund for approval for social security funds to invest, strengthening communication with clients, adding perpetual bonds to the enterprise annuity investment list, and further relaxing the conditions under which insurance funds may invest in perpetual bonds.