Recently, Zhou Hongyi of 360 said: "By GPT-6 to GPT-8, artificial intelligence will develop consciousness and become a new species. In the future, large language models may achieve self-evolution, automatic system updates and self-upgrading, or even exponential evolution, and human beings will face unpredictable security challenges."

Zhou Hongyi, known as a "master of chasing trends", gets involved in almost every hot trend. His words are only partly credible and deserve a big question mark.

Moreover, Zhou Hongyi offered only a prediction, without giving his reasons or the logic behind it.

As a media outlet focused on in-depth industry analysis, we should not stop at clickbait headlines but examine the problem at a deeper level.

Will GPT grow into an artificial general intelligence? If so, how long will that take? Below, we try to work through this unsettling question together.

To answer this question, we need to "tear down" a large model like GPT and see what is inside it and how it works.

Unpacking the black box of the large model

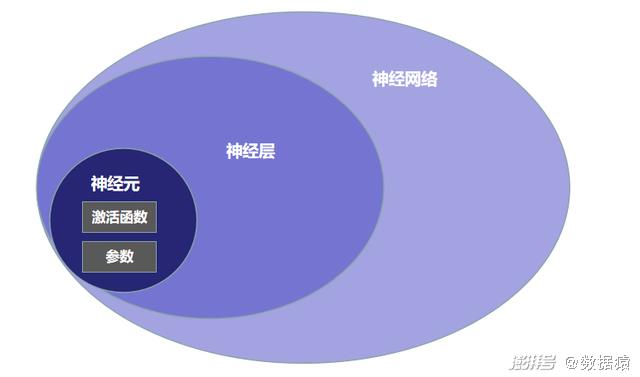

A large model is a neural network model with a very large number of parameters, usually used for complex tasks such as natural language processing, image recognition, and speech recognition. Its core elements are the neural network, layers, neurons, and parameters.

Chart: Data Ape

Neural network

A neural network is a machine learning model inspired by the nervous system of the human brain. It consists of multiple layers, each containing multiple neurons, and the connections between layers form the network. Each neuron receives inputs from the neurons in the previous layer, computes an output from them, and passes it on to the next layer.
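As a minimal sketch of the layer-by-layer flow just described (not how GPT is actually implemented), a small fully connected network can be written in plain Python. The function names `dense_forward` and `network_forward`, the choice of ReLU as the activation, and the toy weights are all illustrative assumptions.

```python
def relu(z):
    """Activation used in this sketch: zero for negative inputs, identity otherwise."""
    return max(0.0, z)

def dense_forward(inputs, weights, biases):
    """One layer: each row of `weights` (plus one bias) belongs to one neuron."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias  # weighted sum
        outputs.append(relu(z))                                        # activation
    return outputs

def network_forward(inputs, layers):
    """Pass the signal through each layer in turn; each layer feeds the next."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_forward(activations, weights, biases)
    return activations

# Hypothetical 2 -> 2 -> 1 network with hand-picked weights.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),  # hidden layer: 2 neurons
    ([[1.0, 1.0]], [0.0]),                    # output layer: 1 neuron
]
print(network_forward([2.0, 1.0], layers))  # [2.5]
```

For simplicity the same activation is applied in every layer, including the output layer; real models usually pick the output activation to suit the task.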

Layer

A layer is part of a neural network and is composed of multiple neurons. Each layer can be configured differently, for example with its own activation function. Typically, each layer transforms its input and passes the output to the next layer. Common layers include fully connected layers, convolutional layers, and pooling layers. Layer types are distinguished mainly by the kind of data they process, their internal structure, and their function.

For example, the fully connected layer is the simplest and most commonly used layer in neural networks. Its core function is to connect every neuron in the previous layer to every neuron in the current layer. Combined with nonlinear activation functions, this dense connectivity allows the network to learn complex nonlinear relationships in the input data. Fully connected layers are commonly used in image classification, speech recognition, natural language processing, and other tasks. The convolutional layer is the core layer of a convolutional neural network (CNN) and is used to process data with spatial structure, such as images. Its core function is to convolve the input data with a set of learnable convolution kernels to extract features. A convolutional layer contains several convolution kernels and their bias terms, and its output is often fed into a pooling layer.
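To make the contrast between layer types concrete, here is a small one-dimensional sketch of a convolution followed by max pooling. The helper names `conv1d` and `max_pool1d`, the edge-detecting kernel `[-1, 1]`, and the input signal are hypothetical; real CNNs use 2-D kernels over images and learn the kernel values during training. As in most deep learning libraries, the kernel is slid over the input without being flipped.

```python
def conv1d(signal, kernel, bias=0.0):
    """'Valid' 1-D convolution: slide one shared kernel along the input."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k)) + bias
        for i in range(len(signal) - k + 1)
    ]

def max_pool1d(values, size):
    """Keep the largest value in each non-overlapping window of `size` elements."""
    return [max(values[i:i + size]) for i in range(0, len(values) - size + 1, size)]

signal = [0, 0, 1, 1, 0, 0]             # a "bump" in the input
features = conv1d(signal, [-1, 1])      # responds to rising and falling edges
print(features)                         # [0.0, 1.0, 0.0, -1.0, 0.0]
print(max_pool1d(features, 2))          # [1.0, 0.0]
```

The design difference shows up in the parameter count: this convolution reuses the same 2 weights and 1 bias at every position, whereas a fully connected layer mapping these 6 inputs to 5 outputs would need 6 × 5 weights plus 5 biases, 35 parameters in total.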

Neuron

Neurons are the basic units of a neural network. A neuron's main function is to receive signals from the input layer or from neurons in the previous layer and generate an output signal. Its inputs arrive through a set of weighted connections and are summed with those weights inside the neuron. The sum is then passed through an activation function, a nonlinear transformation, to produce the neuron's output.

The activation function is the core of how a neuron processes its input. Its role is to introduce nonlinearity, so that the network can model nonlinear relationships, which greatly increases the model's expressive power.
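A short sketch of why the activation function matters: one neuron computes a weighted sum plus bias and then applies an activation. Chaining two neurons with no activation (the `identity` case) still yields a straight line, while inserting ReLU produces a kink, i.e. a genuinely nonlinear function. The names `neuron` and `tiny_net` and the weights are hypothetical choices for illustration.

```python
def neuron(inputs, weights, bias, activation):
    """One artificial neuron: weighted sum of inputs plus bias, then activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)

def identity(z):
    return z  # no nonlinearity at all

def relu(z):
    return max(0.0, z)

def tiny_net(x, activation):
    """Two neurons in sequence: hidden = act(1*x + 0), output = act(2*hidden + 1)."""
    hidden = neuron([x], [1.0], 0.0, activation)
    return neuron([hidden], [2.0], 1.0, activation)

xs = [-1.0, 0.0, 1.0]
print([tiny_net(x, identity) for x in xs])  # [-1.0, 1.0, 3.0]: still a straight line
print([tiny_net(x, relu) for x in xs])      # [1.0, 1.0, 3.0]: a kink at x = 0
```

Without a nonlinear activation, any stack of such neurons collapses into a single linear function, no matter how many layers are added.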

Parameters

Parameters are the variables in a neural network that are updated as the network is trained. Each neuron has a weight vector and a bias term, which together are its parameters. During training, the optimizer updates these values so that the network's output comes as close as possible to the expected output.
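In the simplest case, plain gradient descent, the update the optimizer applies can be written as below, where $\theta$ stands for all parameters, $\eta$ is the learning rate, and $\mathcal{L}$ is the loss. This notation is ours, not the article's, and the optimizers used in practice (for example Adam) add momentum and per-parameter step sizes on top of this basic rule.

$$
\theta \leftarrow \theta - \eta \, \nabla_{\theta} \mathcal{L}(\theta)
$$

For a single neuron with weights $w_i$ and bias $b$, this amounts to

$$
\begin{aligned}
w_i &\leftarrow w_i - \eta \, \frac{\partial \mathcal{L}}{\partial w_i}, \\
b &\leftarrow b - \eta \, \frac{\partial \mathcal{L}}{\partial b}.
\end{aligned}
$$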

To sum up, neurons are the building blocks of layers, and layers are the building blocks of neural networks. Parameters are stored in neurons: each neuron has its own set of parameters (weights and a bias). During training, the optimizer updates these parameters so that the network's output comes as close as possible to the expected output.
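Because every neuron carries its own weights and bias, the parameter count of a fully connected network follows directly from its layer sizes: a layer with `n_in` inputs and `n_out` neurons has `n_in * n_out` weights plus `n_out` biases. The sketch below assumes a purely fully connected network with hypothetical layer sizes; GPT-style models also contain attention and other components, so this is not how their published parameter counts are derived.

```python
def dense_param_count(layer_sizes):
    """Total weights + biases for a chain of fully connected layers."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out  # weight matrix + one bias per neuron
    return total

# Hypothetical network: 784 inputs -> 128 hidden neurons -> 10 outputs.
print(dense_param_count([784, 128, 10]))  # 101770
```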

So how do neural networks, layers, neurons, and parameters work together as a system?

Next, we use the training of GPT-4 as an example to illustrate how these elements cooperate when a large model is trained:

1) First, all parameters of the GPT-4 model are randomly initialized, including the weights and biases of every neuron in the network.

2) Prepare the dataset: text such as articles, novels, papers, and social media posts. Before training, the data must be preprocessed and cleaned, for example by removing special characters and other irrelevant content.

3) Split the training data into small batches, each usually containing hundreds to thousands of text samples. Each batch is fed into the GPT-4 model, which computes an output from its current parameters: a probability distribution over the next word.

4) Compute the model's loss function, which measures how well the model performs on the training set. For language models, cross-entropy is usually used as the loss.

5) Use the backpropagation algorithm to compute the gradient of the loss with respect to each parameter; the gradient gives the rate of change of the loss at the current parameter values. The optimizer then uses these gradients to update the model's parameters so as to reduce the loss.

6) Repeat steps 3-5 until the performance of the model reaches the expectation or the training time is exhausted.
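The six steps above can be sketched on a toy scale. The code below is a hypothetical character-level bigram model (a table of logits predicting the next character from the current one), not a Transformer and not GPT-4's actual training code; `train_bigram`, its hyperparameters, and the training text are assumptions chosen for illustration. With a single table of logits, the softmax plus cross-entropy gradient can be written out directly; in a deep model, backpropagation computes the same kind of gradient through every layer.

```python
import math
import random

def softmax(logits):
    """Turn raw scores into a probability distribution (numerically stable)."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train_bigram(text, epochs=200, batch_size=4, lr=0.5, seed=0):
    """Hypothetical toy trainer: learns P(next char | current char) from `text`."""
    rng = random.Random(seed)
    vocab = sorted(set(text))
    index = {ch: i for i, ch in enumerate(vocab)}
    v = len(vocab)
    # Step 1: randomly initialize the parameters (one row of logits per current char).
    weights = [[rng.gauss(0.0, 0.01) for _ in range(v)] for _ in range(v)]
    # Step 2: build the dataset of (current char, next char) training pairs.
    pairs = [(index[a], index[b]) for a, b in zip(text, text[1:])]
    losses = []
    for _ in range(epochs):                              # Step 6: repeat
        rng.shuffle(pairs)
        epoch_loss = 0.0
        for start in range(0, len(pairs), batch_size):   # Step 3: mini-batches
            batch = pairs[start:start + batch_size]
            grads = {}
            for cur, nxt in batch:
                probs = softmax(weights[cur])            # predicted next-char distribution
                epoch_loss += -math.log(probs[nxt])      # Step 4: cross-entropy loss
                # Step 5: d(loss)/d(logits) for softmax + cross-entropy is probs - one_hot.
                g = grads.setdefault(cur, [0.0] * v)
                for j in range(v):
                    g[j] += probs[j] - (1.0 if j == nxt else 0.0)
            for cur, g in grads.items():                 # plain SGD update, batch-averaged
                for j in range(v):
                    weights[cur][j] -= lr * g[j] / len(batch)
        losses.append(epoch_loss / len(pairs))
    return vocab, weights, losses

vocab, weights, losses = train_bigram("abababab")
print(f"average loss: first epoch {losses[0]:.3f}, last epoch {losses[-1]:.3f}")
```

On this repetitive text the average loss falls steadily and the model learns that "b" follows "a"; the text must contain at least two characters so that there is at least one training pair.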

Somewhat like the human brain

From the above analysis, we can see that the large model tries to simulate how the human brain works. At present, humans are the only creatures we know of with this level of intelligence, so if a large model is to become as intelligent as humans, "bionics" (imitating biology) is the best path.

Next, let’s briefly review the structure and working mechanism of the human brain, and then compare them with those of the large model.

Let’s first look at the structure of the human brain.

The human brain is a neural network system composed of tens of billions of neurons, which are its basic units. Neurons are connected to one another by synapses, the basic channels through which information passes between neurons.

The core function of a neuron is to receive, process, and transmit information. A neuron usually consists of three parts: the cell body, the dendrites, and the axon. Dendrites receive signals from other neurons and carry them to the cell body, which processes them and generates an output signal that the axon transmits to other neurons.

The connection between neurons is usually realized through synapses. Synapses are divided into chemical synapses and electrical synapses. Chemical synapses transmit signals through neurotransmitters, while electrical synapses transmit information directly through electrical signals.

The human brain is composed of a huge number of neurons and synapses, connected according to specific patterns to form different neural circuits and networks. These circuits and networks work together to carry out the body's complex cognitive, perceptual, emotional, and behavioral activities.

Based on this analysis, we can draw an analogy between the elements of the human brain and those of the large model, as shown in the following table:

A layer in the large model can be compared to the cortex in the human brain. A layer of the large model consists of many neurons; each neuron takes the output of the previous layer as input and passes it through an activation function to produce this layer's output. The cerebral cortex is a complex network of neurons and synapses in which each neuron also receives the outputs of other neurons as input, and information is transmitted and processed at synapses via chemical signals.

Neurons in the large model can be compared to neurons in the human brain. They are the basic computing units of the network: they receive input signals and process them through activation functions to generate output signals. Neurons in the human brain are the basic computing units of the organism: they connect to other neurons through synapses, receive chemical signals from them, and generate output in the form of electrical signals.

The parameters of the large model can be compared to synaptic weights in the human brain: they determine the strength and manner of information transmission between neurons. Synaptic weights play a similar role in the brain, determining the connection strength between neurons and how signals are transmitted at synapses.

Quantitative comparison between large model and human brain

So far we have taken only a qualitative view: we outlined how large models and the human brain work and drew analogies between their core elements.

Quantitative change leads to qualitative change: even when two systems are structurally similar, differences in scale often produce great differences in capability.

Next, let’s compare the large model with the human brain quantitatively.

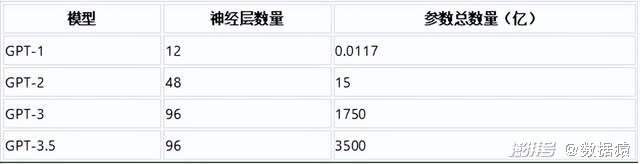

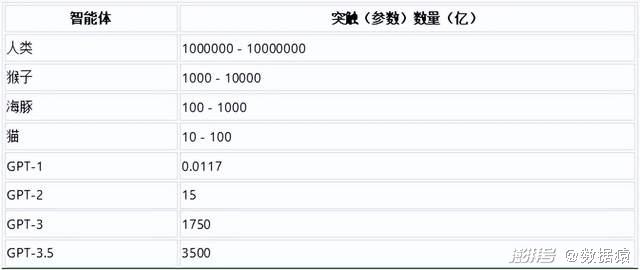

The following are approximate figures for the number of layers and parameters of the GPT-1 to GPT-3.5 models (neuron counts are not disclosed, and no figures have been disclosed for GPT-4):

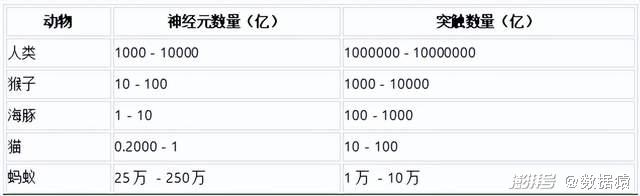

Next, let’s look at the number of neurons and synapses in the human brain. For reference, besides humans we also include monkeys, dolphins, cats, and ants. The results are as follows:

From the analysis above, the neurons of a large model are analogous to human neurons, and its parameters are analogous to synapses in the human brain. Unfortunately, companies in the industry rarely disclose the number of neurons in their large models, although they generally disclose parameter counts. We therefore compare the parameter scale of the GPT series with the number of synapses in human, monkey, dolphin, and cat brains:

As the table above shows, by this measure the "intelligence" level of GPT-3.5 already falls within the range of monkeys, while its parameter count is still 285.7 to 2,857 times smaller than the number of synapses in the human brain.
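The 285.7 to 2,857 range is a simple ratio of synapse count to parameter count. The sketch below reproduces it under two loudly stated assumptions: GPT-3.5 is taken to have 175 billion parameters (the widely reported GPT-3 figure; OpenAI has not officially published GPT-3.5's size), and the human synapse range of 50 to 500 trillion is back-calculated from the ratios in the text rather than taken from the article's table. The constant and function names are hypothetical.

```python
GPT35_PARAMS = 175e9               # assumption: widely reported GPT-3 parameter count
HUMAN_SYNAPSES = (50e12, 500e12)   # assumption: range implied by the ratios in the text

def scale_gap(synapses, params):
    """How many times larger the synapse count is than the parameter count."""
    return synapses / params

low, high = (scale_gap(s, GPT35_PARAMS) for s in HUMAN_SYNAPSES)
print(f"{low:.1f} to {high:.0f}")  # 285.7 to 2857
```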

On the other hand, consider how fast GPT itself has evolved: the parameter scale grew by one to two orders of magnitude in each of the first two iterations, but after GPT-3 its growth slowed considerably. If we assume the parameter scale grows fivefold with each iteration, then after five iterations (5^5 = 3,125, which exceeds 2,857), that is, by GPT-9, its "intelligence level" might catch up with that of humans.
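The "five iterations" figure comes from finding the smallest number of fivefold steps whose product covers the gap to the human upper bound of 2,857. A minimal sketch, assuming a constant growth factor per iteration (the article's assumption; the function name is hypothetical):

```python
def iterations_to_close_gap(gap, growth_per_iteration=5):
    """Smallest n with growth_per_iteration**n >= gap, plus the resulting scale-up."""
    if growth_per_iteration <= 1:
        raise ValueError("growth per iteration must be greater than 1")
    n, scale = 0, 1
    while scale < gap:
        scale *= growth_per_iteration
        n += 1
    return n, scale

print(iterations_to_close_gap(2857))   # (5, 3125): upper end of the human range
print(iterations_to_close_gap(285.7))  # (4, 625): lower end of the human range
```

This reproduces only the arithmetic of parameter growth; it says nothing about whether intelligence actually scales with parameter count.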

Of course, this inference rests on a very simple model, which assumes that intelligence is positively correlated with the number of synapses (parameters). Whether that assumption holds is highly questionable.

Compared with synapses, neurons are a better indicator of intelligence. As things stand, the information-processing ability of a large model's activation functions is far weaker than that of a neuron in the human brain. Large-model activation functions are relatively simple. For example, a neuron with a sigmoid activation produces a smooth S-shaped curve over its output range, which suits tasks such as binary classification, while a ReLU (rectified linear unit) activation outputs zero for negative inputs and passes positive inputs through unchanged. By contrast, a neuron in the human brain is a biological cell whose information-processing ability far exceeds that of a simple mathematical function. Therefore, even if the number of neurons in a large model reaches the scale of the human brain, on the order of a hundred billion (corresponding to a parameter scale of more than 1,000 trillion), its intelligence would still not match that of the human brain.
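To show just how simple these activation functions are, here are sigmoid, $\sigma(z) = 1 / (1 + e^{-z})$, and ReLU, $\max(0, z)$, in a few lines, plus the usual way a sigmoid output is turned into a binary class by thresholding at 0.5. The `classify` helper and the 0.5 threshold are illustrative assumptions.

```python
import math

def sigmoid(z):
    """Smooth S-shaped curve mapping any real number into the range 0 to 1."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)               # avoids overflow for large negative z
    return e / (1.0 + e)

def relu(z):
    """Rectified linear unit: zero for negative inputs, identity for positive ones."""
    return max(0.0, z)

def classify(z, threshold=0.5):
    """Binary classification: read sigmoid(z) as P(class 1) and threshold it."""
    return 1 if sigmoid(z) >= threshold else 0

print(sigmoid(0.0), relu(-2.0), relu(3.0))  # 0.5 0.0 3.0
print(classify(2.0), classify(-2.0))        # 1 0
```

Each is a single fixed formula with no internal state, which is the contrast the article draws with a biological neuron.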

Moreover, although the neural network of a large model can be compared to the neural network of the human brain, the two are implemented in completely different ways. A large model simulates the connections and information transmission between neurons with a mathematical model, while the human brain's neural network is a complex structure made of neurons, synapses, and other biological elements. The connections between neurons in the brain are also extremely complex and can be freely established, removed, and adjusted, whereas the connection structure of a large model is fixed in advance by its architecture, with only the weights changing during training.

For now, humans are still relatively "safe". But let’s not forget that the number of neurons in the human brain is almost constant, while the number of neurons and parameters in large models is growing exponentially. If this trend continues, it may be only a matter of time before large models catch up with humans in intelligence.

Geoffrey Hinton, a towering figure in artificial intelligence, said: "General artificial intelligence is developing much faster than people think. Until recently, I thought it would take about 20 to 50 years for us to achieve general artificial intelligence. Now, it may take 20 years or less."

Faced with the prospect of general or even super artificial intelligence, people have mixed feelings. On the one hand, they hope for more intelligent systems that can help humanity do more work and free up productive forces; on the other, they fear opening Pandora’s box and releasing a monster more terrible than nuclear weapons.

I hope that even if we create a "god", it will be a god full of love, not one that regards human beings as ants!

Text: A Misty Rain / Data Ape